National supercomputing resources enable research that would otherwise be impossible

When researchers need to compare complex new genomes, map new regions of the Arctic in high-resolution detail, detect signs of dark matter, or make sense of massive amounts of fMRI data, they turn to the high-performance computing and data analysis systems supported by the National Science Foundation (NSF).

High-performance computing (HPC) enables discoveries in practically every field of science: not only those typically associated with supercomputers, such as chemistry and physics, but also the social sciences, life sciences and humanities.

By combining superfast and secure networks, cutting-edge parallel computing and analytics software, and advanced scientific instruments and critical datasets across the U.S., NSF's cyber-ecosystem lets researchers investigate questions that can't otherwise be explored.

NSF has supported advanced computing since its founding and continues to expand access to these resources. Each year, they help tens of thousands of researchers, from high school students to Nobel Prize winners, at institutions large and small and regardless of location, expand the frontiers of science and engineering.

Below are 10 examples of research, drawn from across science, that advanced computing resources have made possible.

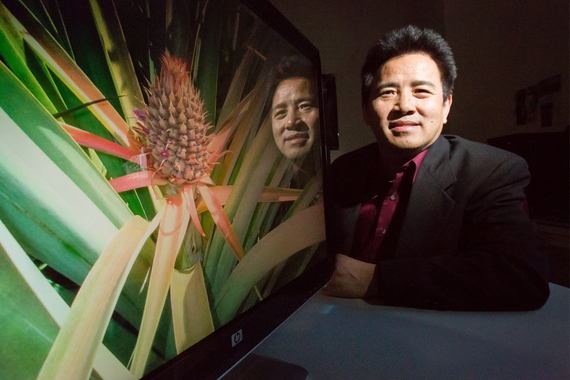

Pineapples don't just taste juicy; they have a juicy evolutionary history. Recent analyses using computing resources that are part of the iPlant Collaborative have revealed an important relationship between pineapples and crops like sorghum and rice, helping scientists home in on the genes and genetic pathways that let plants thrive in water-limited environments.

Led by The University of Arizona, the Texas Advanced Computing Center, Cold Spring Harbor Laboratory and the University of North Carolina at Wilmington, iPlant was established in 2008 with NSF funding to develop cyberinfrastructure for life sciences research, provide powerful platforms for data storage and bioinformatics, and democratize access to U.S. supercomputing capabilities.

This week, iPlant announced it will host a new platform, Digital Imaging of Root Traits (DIRT), that lets scientists in the field measure up to 76 root traits merely by uploading a photograph of the roots.

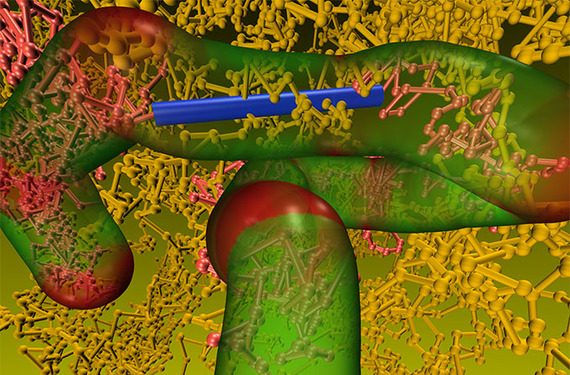

Designing new nanodevices

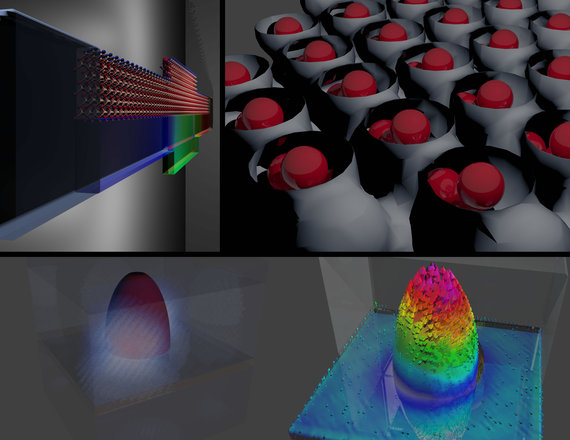

At the nanoscale, new phenomena take precedence over those that hold sway in the macro-world. Software that simulates the effect of an electric charge passing through a transistor that is only a few atoms wide is helping researchers to explore alternative materials that may replace silicon in future devices.

The software simulations designed by Purdue researcher Gerhard Klimeck and his group, available on the nanoHUB portal, provide new information about the limits of current semiconductor technologies and are helping to design future generations of nanoelectronic devices.

nanoHUB, supported by NSF, is the first broadly successful end-to-end scientific cloud computing environment. It provides a library of 3,000 learning resources to 195,000 users worldwide, and its 232 simulation tools are used in the cloud by more than 10,800 researchers and students annually.

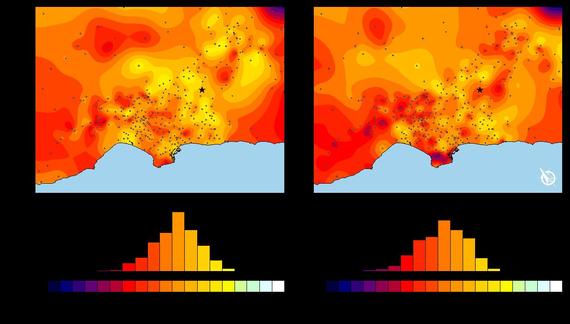

Earthquakes originate through complex interactions deep below the surface of the Earth, making them notoriously difficult to predict.

The Southern California Earthquake Center (SCEC) and its lead scientist Thomas Jordan use massive computing power to simulate the dynamics of earthquakes. In doing so, SCEC helps to provide long-term earthquake forecasts and more accurate hazard assessments.

In 2014, the SCEC team investigated the earthquake potential of the Los Angeles Basin, which lies near the San Andreas Fault, where the Pacific and North American plates grind past each other. Their simulations showed that the basin essentially acts like a big bowl of jelly that shakes during earthquakes, producing stronger ground shaking than the team expected.

Using the NSF-funded "Blue Waters" supercomputer at the National Center for Supercomputing Applications and the Department of Energy-funded "Titan" supercomputer at the Oak Ridge Leadership Computing Facility, they turned their simulations into seismic hazard models that describe the probability that an earthquake will occur in a given geographic area, within a given window of time and with ground motion intensity exceeding a given threshold.
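To make "the probability that an earthquake will occur ... within a given window of time" concrete, here is a minimal sketch built on a common textbook simplification: assume that exceedances of a chosen ground-motion threshold at a site follow a Poisson process with a constant annual rate. This assumption is for illustration only; SCEC's actual hazard models are far more detailed, and the function name and the rate used below are hypothetical.

```python
import math


def exceedance_probability(annual_rate: float, years: float) -> float:
    """Chance of at least one exceedance of a shaking threshold within `years`.

    Illustrative assumption: exceedances follow a time-independent Poisson
    process, occurring on average `annual_rate` times per year.
    """
    if annual_rate < 0 or years < 0:
        raise ValueError("annual_rate and years must be non-negative")
    # P(at least one) = 1 - P(none) = 1 - exp(-rate * time)
    return 1.0 - math.exp(-annual_rate * years)


# Hypothetical site where the threshold is exceeded on average once every 475 years.
p = exceedance_probability(1 / 475, 50)
print(f"{p:.3f}")  # prints 0.100
```

Under this assumption, a once-in-475-years level of shaking has roughly a 10 percent chance of being exceeded in any 50-year window; a hazard model makes this kind of statement for many sites and shaking levels at once.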

Nearly 33,000 people die in the U.S. each year due to motor vehicle crashes, according to the National Highway Traffic Safety Administration. Modern restraint systems save lives, but some deaths and injuries remain, and restraints themselves can cause injuries.

Researchers from the Center for Injury Biomechanics at Wake Forest University used the "Blacklight" supercomputer at the Pittsburgh Supercomputing Center to simulate the impacts of car crashes with much greater fidelity than crash-test dummies can.

By studying a variety of potential occupant positions, they're uncovering important factors that lead to more severe injuries as well as ways to potentially mitigate these injuries using advanced safety systems.

Since Albert Einstein, scientists have predicted that when major cosmic events like black hole mergers occur, they leave a trace in the form of gravitational waves: ripples in the curvature of space-time that travel outward from the source. The Advanced Laser Interferometer Gravitational-Wave Observatory (Advanced LIGO) is a project designed to capture signs of these events.

Because gravitational waves are expected to travel at the speed of light, a passing wave should reach widely separated detectors at slightly different times. Advanced LIGO therefore relies on two observatories, located 1,865 miles apart and working in unison, that can confirm a signal seen at both sites and use the difference in its arrival times to help determine where in the sky the wave came from.
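A short calculation shows why the distance between the observatories matters. Assuming a straight-line separation of 1,865 miles and signals moving at the speed of light, a wave can reach one site at most about 10 milliseconds before the other, and a measured delay fixes the angle between the source direction and the line joining the two sites. This is a simplified geometric sketch with illustrative function names; real sky localization also uses how each detector responds to the wave and the shape of the signal.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_MILE = 1_609.344


def max_arrival_delay_s(separation_miles: float) -> float:
    """Largest possible arrival-time difference between two detectors."""
    return separation_miles * METERS_PER_MILE / SPEED_OF_LIGHT_M_PER_S


def source_angle_deg(delay_s: float, separation_miles: float) -> float:
    """Angle between the direction to the source and the detector baseline.

    Uses cos(theta) = c * delay / d. One delay fixes only this angle, so two
    detectors confine the source to a ring on the sky rather than a point.
    """
    ratio = SPEED_OF_LIGHT_M_PER_S * delay_s / (separation_miles * METERS_PER_MILE)
    if abs(ratio) > 1.0 + 1e-9:
        raise ValueError("delay exceeds the light travel time between detectors")
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding just past +/-1
    return math.degrees(math.acos(ratio))


print(f"{max_arrival_delay_s(1865) * 1000:.1f} ms")  # prints 10.0 ms
```

A delay of zero, for example, means the source lies somewhere on the great circle perpendicular to the baseline (an angle of 90 degrees), which is why additional information is needed to narrow the position further.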

In addition to being an astronomical challenge, Advanced LIGO is also a "Big Data" problem. The observatories take in huge volumes of data that then must be analyzed to determine their meaning. Researchers estimate that Advanced LIGO will generate more than 1 petabyte of data a year, the equivalent of 13.3 years of high-definition video.

To achieve accurate and rapid gravitational-wave detection, researchers are using the Extreme Science and Engineering Discovery Environment (XSEDE), a powerful collection of advanced digital resources and services, to develop and test new methods for transmitting and analyzing massive quantities of astronomical data.

Advanced LIGO came online in September 2015, and advanced computing will play an integral part in its future discoveries.

What happens when a supercomputer reaches retirement age? In many cases, it continues to make an impact in the world. The NSF-funded "Ranger" supercomputer is one such example.

In 2013, after five years as one of NSF's flagship computer systems, Ranger was disassembled by the Texas Advanced Computing Center (TACC) and shipped from Austin, Texas, to South Africa, Tanzania and Botswana to help a young and growing supercomputing community take root.

With funding from NSF, TACC experts led training sessions in South Africa in December 2014. In November 2015, 19 delegates from Africa came to the U.S. to attend a two-day workshop at TACC as well as the Supercomputing 2015 (SC15) International Conference for High Performance Computing.

The effort is intended, in part, to help provide the technical expertise needed to successfully staff and operate the Square Kilometer Array, a new radio telescope that is being built in Australia and Africa and that will offer the highest resolution images in all of astronomy.

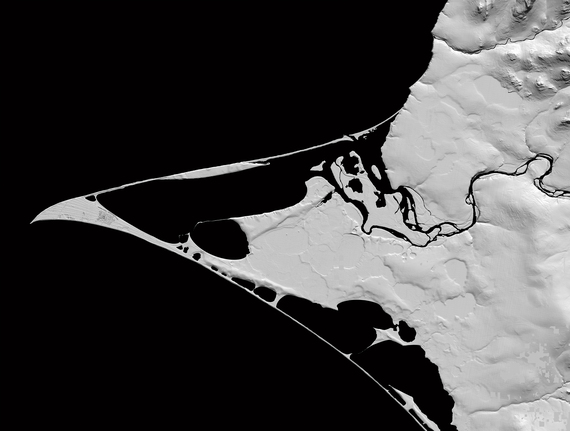

In September 2015, President Obama announced plans to improve maps and elevation models of the Arctic, including Alaska. To that end, NSF and the National Geospatial-Intelligence Agency (NGA) are supporting the development of high-resolution Digital Elevation Models in order to provide consistent coverage of the globally significant region.

The models will allow researchers to see in detail how warming in the region is affecting the landscape in remote areas and allow them to compare changes over time.

The project relies, in part, on the computing and data analysis powers of Blue Waters, which will let researchers store, access and analyze large numbers of images and models.

To solve some of society's most pressing long-term problems, the U.S. needs to educate and train the next generation of scientists and engineers to use advanced computing effectively. This training pipeline begins as early as high school and continues throughout a scientist's career.

Last summer, TACC hosted 50 rising high school juniors and seniors in CODE@TACC, an innovative new STEM program that introduced students to high-performance computing, life sciences and robotics.

On the continuing education front, XSEDE offers hundreds of training classes each year to help researchers update their skills and learn new ones.

HPC has another use in education: to assess how students learn and ultimately to provide personalized educational paths. A recent report from the Computing Research Association (CRA), "Data-Intensive Research in Education: Current Work and Next Steps," highlights insights from two workshops on data-intensive education initiatives. The LearnSphere project at Carnegie Mellon University, an NSF Data Infrastructure Building Blocks (DIBBs) project, is putting these ideas into practice.

In 2014, NSF invested $20 million to create two cloud computing testbeds that let the academic research community develop and experiment with cloud architectures and pursue new, architecturally enabled applications of cloud computing.

CloudLab (with sites in Utah, Wisconsin, and South Carolina) came online in May 2015 and provides researchers with the ability to create custom clouds and test adjustments at all levels of the infrastructure from the bare metal on up.

Chameleon, a large-scale, reconfigurable experimental environment for cloud research, co-located at the University of Chicago and The University of Texas at Austin, went into production in July 2015. Both serve hundreds of researchers at universities across the U.S. and let computer scientists experiment with unique cloud architectures in ways that weren't available before.

The NSF-supported "Comet" system at the San Diego Supercomputer Center (SDSC) was dedicated in October and is already aiding scientists in a number of fields, including domains that are relatively new to using supercomputers, such as neuroscience.

SDSC recently received a major grant to expand the Neuroscience Gateway, which provides easy access to advanced cyberinfrastructure tools and resources through a web-based portal and can significantly improve researchers' productivity. The gateway will contribute to the national BRAIN Initiative and deepen our understanding of the human brain.

--

Images (from top):

Researchers from MIT and the University of Reading used the "Kraken" and "Darter" supercomputers at the National Institute for Computational Science, and "Gordon" at the San Diego Supercomputer Center (SDSC) to explore new polymers for organic photovoltaics. [Image credit: Muzhou "Mitchell" Wang and Christopher N. Lam, Massachusetts Institute of Technology.]

Plant biology professor Ray Ming led an international team that sequenced the pineapple genome using iPlant. [Photo by L. Brian Stauffer]

Visualizations of future nanotransistors, with diameters 1,000 times smaller than a human hair, explored on "Blue Waters". [Credit: Institute for Nanoelectronic Modeling (iNEMO) led by Gerhard Klimeck]

A chart compares SCEC simulations to observations from the 2008 Chino Hills earthquake. [Credit: Ricardo Taborda, University of Memphis, and Jacobo Bielak, Carnegie Mellon University]

Simulation of a real-world crash with a human body model, run on "Blacklight" at the Pittsburgh Supercomputing Center. [Credit: Wake Forest University Center for Injury Biomechanics]

The LIGO Livingston Observatory in Louisiana aims to detect gravitational waves. [Credit: MIT/CalTech LIGO]

This artist's rendition of the Square Kilometer Array mid-frequency dishes in Africa shows how they may eventually look when completed. [Credit: SKA Organisation]

Digital Elevation Models like this depiction of Point Hope, Alaska, will help enhance research in the Arctic region. [Credit: Polar Geospatial Center, Ohio State University, Cornell University, DigitalGlobe Inc.]

High-school students participate in the CODE@TACC summer program in Austin, Texas. [Credit: Texas Advanced Computing Center]

Apt, an NSF-funded precursor testbed to CloudLab, is adaptable to many different research domains. [Credit: Chris Coleman, School of Computing, University of Utah]

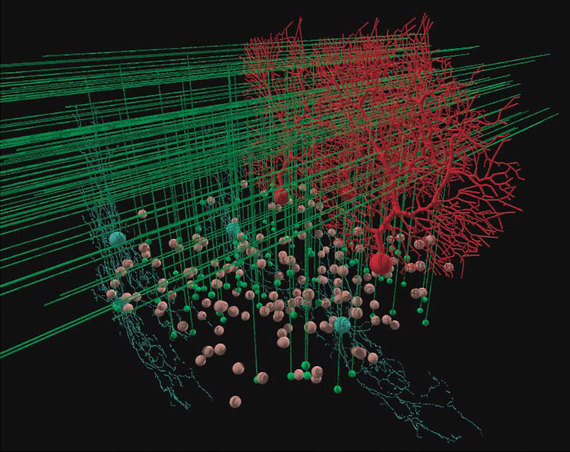

Visualization of a 3-D cerebellar cortex model generated by researchers Angus Silver and Padraig Gleeson of University College London. The Neuroscience Gateway was used for the simulations. [Credit: SDSC]