Earlier this month the Association of American Universities, a lobbying organization for elite research universities, released results of its large survey of students regarding their experience with college sexual assault. Twenty-seven universities participated. According to the study, approximately one in 10 undergraduate women have been subjected to sexual penetration by force or when incapacitated, and approximately one in four undergraduate women have been subjected to a sexual assault that includes nonconsensual penetration or other forms of sexual touching.

The results are broadly consistent with numerous other surveys. And once again various writers have criticized the results by questioning methodology. Questioning methodology is always appropriate, of course -- when it is done objectively.

As my colleagues and I have noted in other venues, the AAU survey was flawed in certain ways. But many critics, seemingly unwilling to believe that sexual assault can be so widespread, raise only questions that suggest an overestimate of the rate of campus sexual violence. That is understandable as an advocacy tactic. But the critics would be more believable if there were some basis for the criticisms they make.

Emily Yoffe in Slate is among the latest to disparage studies showing high rates of sexual assault. Yoffe and others present two main arguments for why they think the AAU survey has produced inflated estimates of college sexual violence: (1) a too-broad definition of sexual assault that included too many types of touching in the one-in-four estimate and (2) a too-small response rate to the survey, which they say necessarily means victims were overrepresented.

These are both troubled arguments. Regarding the first issue, I will be very brief in this context and just make two observations. First, it is important to note that the definition debate is not about penetration estimates (one in 10), but rather about estimates of the more inclusive category of sexual contact (one in four). Second, when it comes to the definition of sexual assault, I agree that one can question the decision to include various sorts of non-genital touching in the sexual contact figures. But, just as importantly, one can also question the decision to exclude, from both the one-in-10 estimate for penetration and the one-in-four estimate for sexual contact, cases where the perpetrator did not use physical force or incapacitation but rather relied on verbal coercion and/or failed to get consent and/or failed to heed verbal refusals to initiate sexual contact. While events involving these tactics were measured and reported on by the AAU, they were not included in the widely publicized estimates. Thus, the category of sexual assault that was publicized may be too broad in one sense and much too narrow in another.

I am even more troubled by the second argument made by the critics -- the one regarding response rate. The rest of this essay, therefore, will focus on the question of whether victims were overrepresented in the final sample due to the low response rate.

Critics like Yoffe don't buy that AAU's survey provides a reliable picture that one in four female undergraduates experience sexual assault or misconduct -- they say it's inflated. The primary evidence they cite is the low response rate from those who were offered the survey. The argument is that, despite the collection of 150,000 responses, the low response rate (overall, 19 percent; for female undergraduates, 21 percent) suggests that victims were more likely to respond than non-victims. What evidence does Yoffe have for this argument?

To a scientist, response rate by itself is not the most important issue regarding the quality of the sample. The more important scientific issue is generalizability of the sample. There are many threats to generalizability, some of which the AAU attempted to correct by using demographic variables to weight prevalence estimates. But the primary threat that gets discussed by critics assumes there is some kind of self-selection due to motivation. The discussion of this has been remarkably one-sided, assuming that those who were sexually assaulted are the ones more likely to fill out the survey -- and thus having the effect of inflating the estimates.

But an equally plausible self-selection concern is that those who were sexually assaulted are more likely to avoid the survey. In fact, those of us who research and work with survivors of sexual violence know that avoidance is a hallmark of post-trauma response. The pundits, however, only worry about one sort of bias. They essentially claim that a low response rate equals a disproportionate number of victims in the sample. This claim is fundamentally what we call in science an empirical question. What does the empirical evidence have to say about this?

All of the 27 universities that participated in the AAU survey have posted some or all of their survey results online. (This is a bit of a happy surprise given how secretive the project seemed to be when it started.) The public posting of the results by schools allows us to put that question to the AAU data. (Links to all 27 individual reports can be found in this article.)

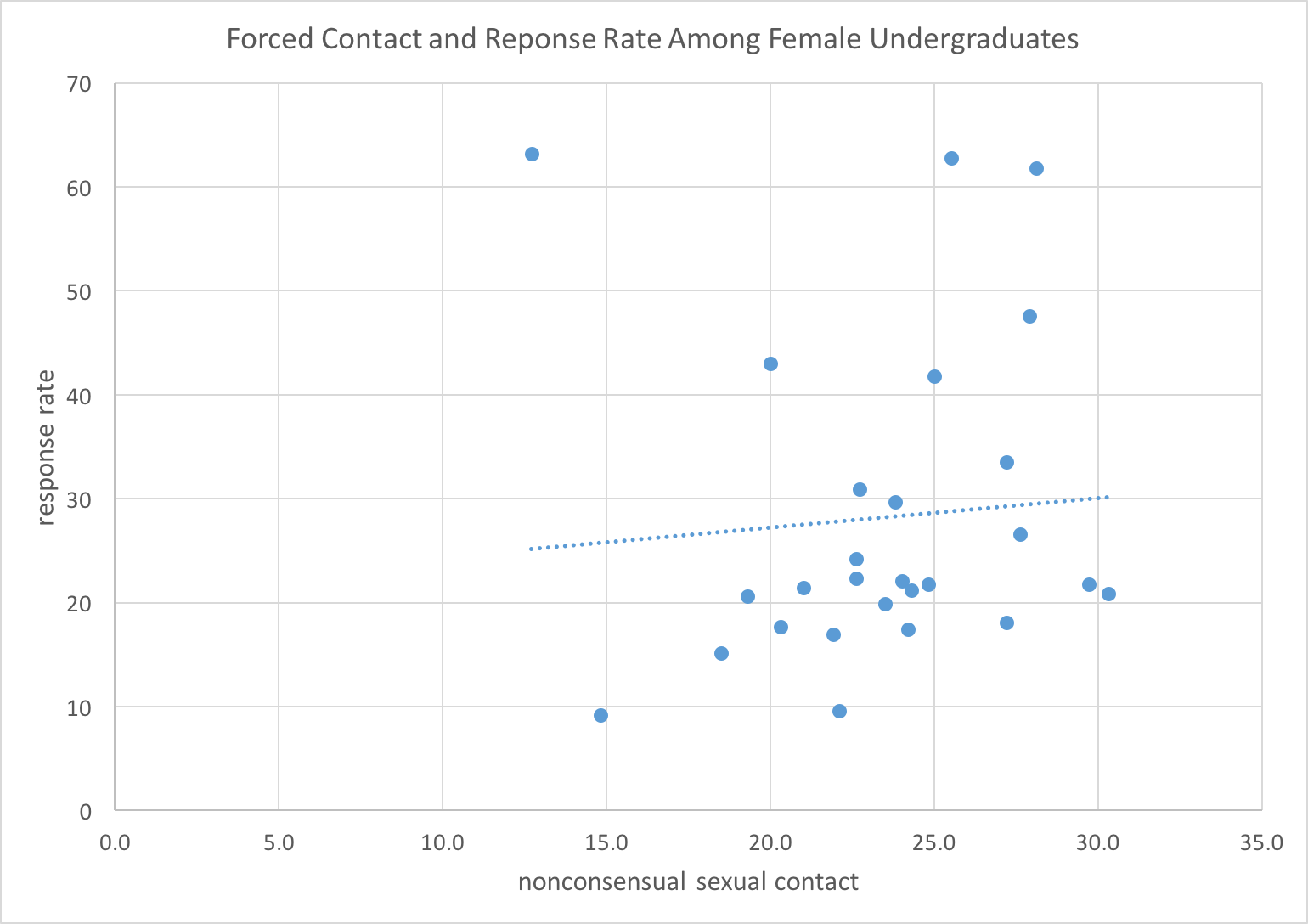

The response rates varied considerably between institutions (from a low of 9.2 percent to a high of 63.2 percent). There was also variation in estimates of sexual assault victimization (for penetration with force or incapacitation the rates varied from a low of 5.7 percent to a high of 14.5 percent; for nonconsensual sexual contact with force or incapacitation the estimates varied between 12.7 percent and 30.3 percent). But are response rates and victimization rates correlated with one another?

If Yoffe and the other critics are right, we should see that as the response rate goes up, the victimization estimates go down. What do we actually find? We can ask whether the most publicized victimization statistic -- the rate of female undergraduates indicating they experienced nonconsensual sexual contact involving force or incapacitation -- is correlated with response rates for female undergraduates. If there is a systematic bias, such that higher response rates lead to lower or higher estimates of sexual violence, we might expect to see that in this relationship. However, the data paint a clear picture of no significant relationship (the trend is, if anything, slightly positive, such that a higher response rate is associated with higher estimates, the opposite of Yoffe's claim; with all 27 schools considered, r = .08, ns).

Figure 1 shows the value for each of the 27 schools plotted by response rate and sexual contact rate for female undergraduates. (A comparable analysis comparing female undergraduate response rates to nonconsensual penetration experiences reported by female undergraduates produces a correlation of r = .01, ns; in other words, no relationship at all.)
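For readers who want to see what "ns" means for a correlation across 27 schools, here is a minimal sketch of the standard significance test for a Pearson correlation. It takes only a correlation and a number of schools as inputs and is not necessarily the exact procedure used for the analysis in this essay. The function names `t_pdf` and `two_tailed_p` are illustrative, and the p-value is found by simple numerical integration so that no statistics library is needed.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(r, n, steps=20000):
    """Two-tailed p-value for a Pearson correlation r based on n paired observations."""
    df = n - 2
    # Convert r to a t statistic with n - 2 degrees of freedom.
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule (steps must be even) for the area under the density from 0 to t.
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    # Whatever lies outside [-t, t] is the two-tailed p-value.
    return 1 - 2 * area

# Correlations reported in this essay for all 27 schools.
for r in (0.08, 0.01):
    print(f"r = {r:.2f}, n = 27, p = {two_tailed_p(r, 27):.2f}")
```

With only 27 data points, a correlation has to be fairly large before it can be distinguished from chance, which is why values as small as these count as no detectable relationship.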

Figure 1: Scatterplot of AAU survey response rate by estimate of rate of nonconsensual sexual touching for 27 institutions. The trend line depicted is based on data for all 27 schools.

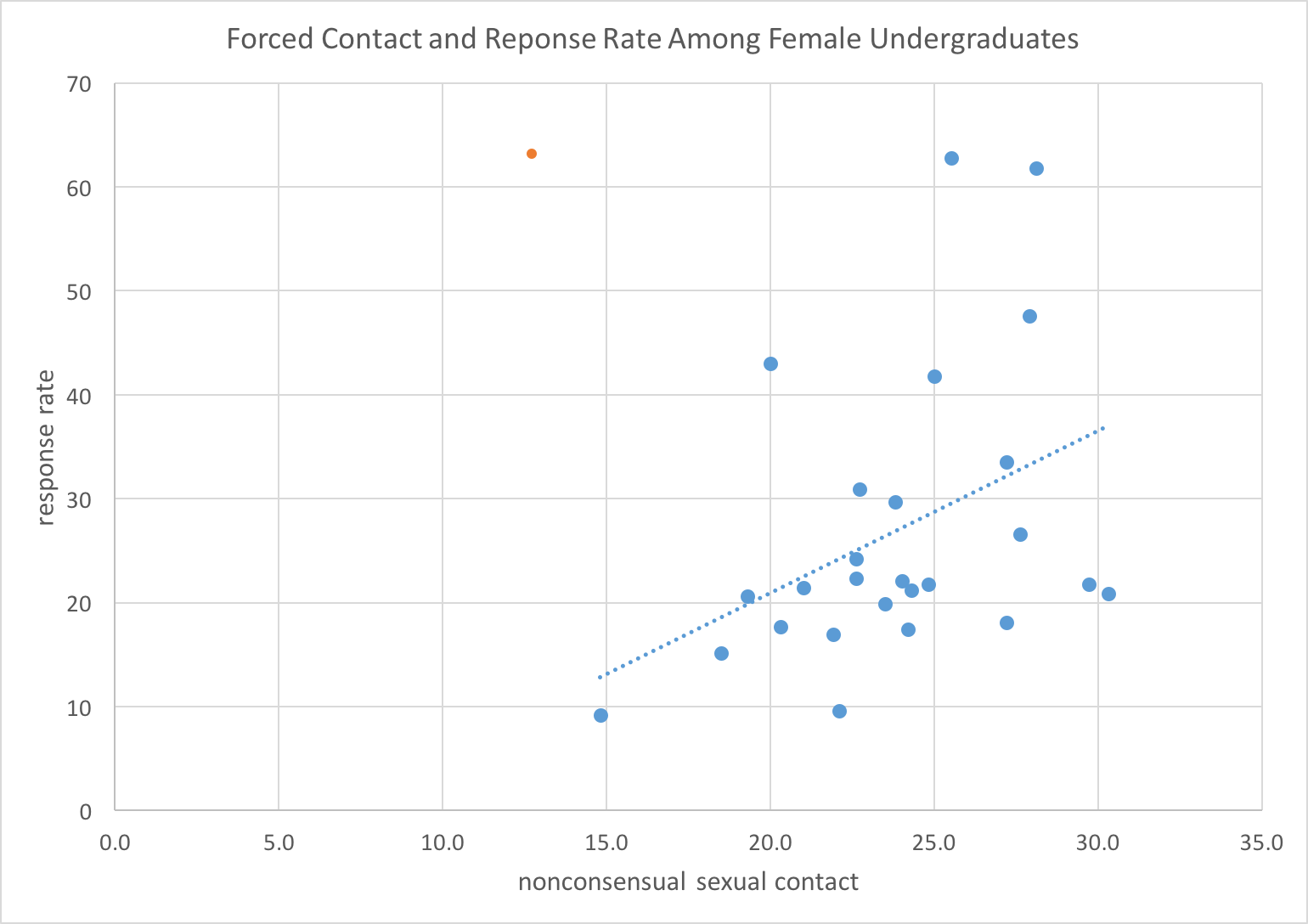

Interestingly, if we take Cal Tech, which appears to be an outlier, out of the analysis, the relationship becomes statistically significant -- in the opposite direction from what Yoffe and similar critics would predict. That is, as response rate goes up, so too do estimates of nonconsensual sexual contact involving force or incapacitation for female undergraduates (r = .41; p = .04). This is shown in Figure 2. I'm not sure it is wise to take Cal Tech out of the final analysis, but I do note that the Cal Tech data behave and look like an outlier statistically, and thus it is important to understand how much of the analysis does or does not depend on that one outlier. As it turns out, either way we look at it, the analysis provides no support for the claim that lower response rates are associated with higher estimates of sexual assault in the AAU survey.

Figure 2: Scatterplot of AAU survey response rate by estimate of rate of nonconsensual sexual touching for 27 institutions. The trend line depicted is based on data for 26 of the 27 schools after removing the outlier (which is depicted in a different color).
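The kind of outlier check described above can be sketched in a few lines: compute the correlation for all schools, then recompute it with each school dropped in turn to see how much any single point drives the result. The numbers below are made-up placeholders chosen only to show the mechanics; they are NOT the AAU figures. The function names `pearson_r` and `leave_one_out` are illustrative.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def leave_one_out(xs, ys):
    """Return r for the full data and a list of r values with each point dropped in turn."""
    full = pearson_r(xs, ys)
    dropped = [
        pearson_r(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        for i in range(len(xs))
    ]
    return full, dropped

# Hypothetical placeholder values (percentages), NOT data from the AAU survey.
# The last school has a very high response rate and a low estimate.
response_rate = [10.0, 15.0, 20.0, 25.0, 30.0, 60.0]
contact_rate = [18.0, 20.0, 22.0, 24.0, 26.0, 13.0]

full, dropped = leave_one_out(response_rate, contact_rate)
print(f"all schools: r = {full:.2f}")
for i, r in enumerate(dropped):
    print(f"without school {i}: r = {r:.2f}")
```

In this toy example a single extreme point is enough to flip the sign of the correlation, which is why it is worth reporting the result both with and without a suspected outlier.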

Conclusion

I agree with Yoffe and other critics that the AAU survey has limitations and we should be cautious about generalizing from it. We also should be promoting much better instruments -- ones that are open-access and based on the best science, such as the Administrator Researcher Campus Climate Collaborative, or ARC3. However, critiques such as Yoffe's are fundamentally misstating the problem. There is no evidence that the AAU survey overestimates rates of sexual assault. It is time for this society to stop putting its energy into denying a very real problem. Instead let us accept the fact that the rates of sexual violence are way too high and work on fixing this terrible problem.