When you search for something on Google, you'll notice that the search engine tries to predict your query by completing it as you type. Those autocomplete suggestions come from complex algorithms designed to work out what a user is trying to find. Google Instant is a feature meant to save people time by cutting down on typing, but according to a new study by Lancaster University scholars Paul Baker and Amanda Potts, it also has a very serious downside. They present their findings in the current issue of Critical Discourse Studies, published by Taylor & Francis.

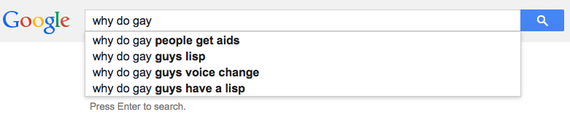

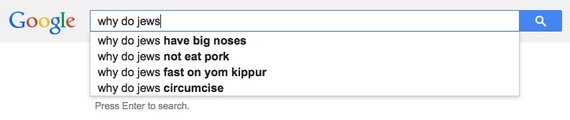

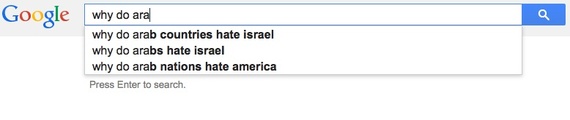

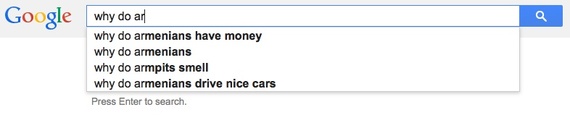

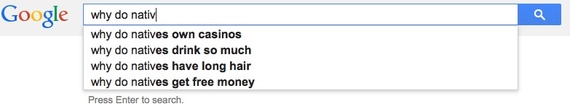

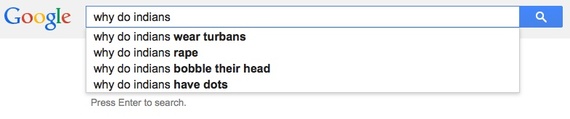

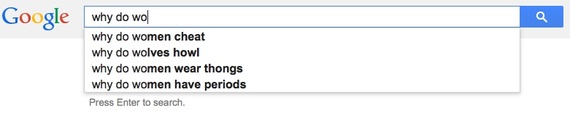

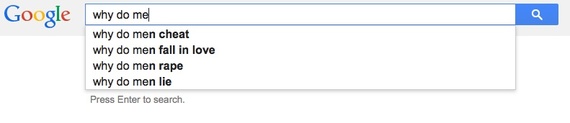

According to the study, Google Instant also appears to perpetuate racist, ethnic, and homophobic stereotypes in its effort to save you some time. Just type "why do gays," for instance, and you'll see that the suggestions are pretty offensive. The same goes for other groups, from Arabs, Asians, and Armenians all the way to plain ol' men and women.

"Clearly these suggested questions appear because they are the sorts of questions that other people have typed into Google in the past with a relatively high frequency," write Baker and Potts. "It is also likely that once certain questions become particularly frequent, they will be clicked on more often (thus enhancing their popularity further) so they will continue to appear as auto-suggestions."

Baker and Potts go on to point out that it's we humans who have trained search engines to perpetuate these stereotypes, not the other way around.

"Interestingly, the 'control' category 'people' produced proportionally the most negative questions, which tended to be concerned with why people engaged in hurtful behaviors," they write. "However, there were also relatively high proportions of negative evaluative questions for black people, gays and males."

One of Baker and Potts's main concerns is that there doesn't appear to be a way to report or object to these offensive autocomplete suggestions, and that users who stereotype others "may feel that their attitudes are validated, because the questions appear on a reputable search page such as Google."

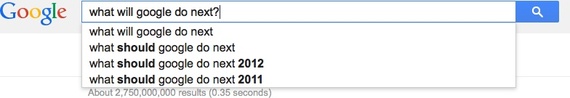

So what will Google do next? That remains to be seen.

(Originally posted on PopCurious.com. Read the full academic article, "'Why do white people have thin lips?' Google and the perpetuation of stereotypes via auto-complete search forms," over on Taylor & Francis.)