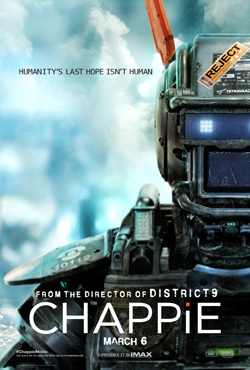

I was fortunate enough to be invited to a screening and press conference for the movie Chappie. It is a great movie about a police robot named Chappie who is loaded with a program that makes him self-aware.

At the press conference, Neill Blomkamp, the movie's director, screenwriter, and the person who came up with the idea for it, said he wasn't sure whether humanity could ever produce the kind of artificial intelligence (AI) seen in Chappie. I was curious whether that was possible myself.

To answer that question, I interviewed Dr. Wolfgang Fink, founder and director of the Visual and Autonomous Exploration Systems Research Laboratory at Caltech and at the University of Arizona. He is working on building an autonomous "planetary field geologist" robot for NASA.

Alejandro Rojas: How would you define an autonomous exploration system?

Dr. Wolfgang Fink: A truly autonomous exploration system is not controlled by humans at all. It may be given a charter, for example, "Go out there and explore a planet and then report back once you see something exciting." So far, humans have always told robots what to do, and that is where my lab tries to make a difference: the robot, or the system, decides for itself where to go next and what to explore.

Rojas: So, coming up with the decision, or the reasoning aspect, would you call that artificial intelligence?

Fink: I would not, and that is very important. Over the last several decades, the term artificial intelligence has referred to largely rule-based systems, so-called Mamdani-type rules. What that means is: if you encounter a certain type of situation, react a certain way; if you encounter another situation, react another way. If you have thousands of these rules in a system, then, looking from the outside in, it appears as if the system were intelligent. The problem with such an approach is that, while it is applicable and sufficient for certain tasks, especially tasks where we know everything that could happen and there are no surprises, it doesn't lend itself to situations with surprises or a heavily changing, dynamic environment.

With Chappie, by contrast, you have a system that has been instilled with the capability to modify itself, to modify its thinking and learning over time, and to be influenced by its environment. That is the mark of an autonomous system, because at that point you can no longer really predict what trajectory or development the system will take in the future.

Rojas: So, you are saying that Chappie is more along the lines of using autonomous reasoning rather than artificial intelligence.

Fink: Yes. The police robots, and Chappie was one of those, offer a nice comparison. While the police robots are highly automated, and many people would also call them autonomous, they are governed more by an artificial intelligence, because they have the law-and-order book internalized. If someone is parked illegally, they give them a ticket; if someone pulls a gun, they pull a gun. They work according to certain schemes and a certain set of actions.

However, Chappie, in stark contrast, is not governed by those artificial intelligence rules. He develops over time, being taught like a child. The culmination comes when, at some point, he says, "I am Chappie." That is a huge statement, because it means that at that point he has become self-aware. That is the highest order, and the true mark, of an autonomous system.
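For readers who want to see what a scripted, rule-based controller looks like, here is a minimal sketch in the spirit of the police robots Fink describes. It is purely illustrative: the situation names, actions, and the `react` function are hypothetical and not taken from the film or from any real robot. It also uses crisp if-then lookups; Mamdani-style systems proper use fuzzy rules with graded membership, which this sketch does not attempt.

```python
# Hypothetical rule book: each known situation maps to one fixed action.
# These names are illustrative only, not from the film or a real system.
RULES = {
    "illegally_parked_car": "issue_ticket",
    "suspect_draws_gun": "draw_weapon",
}


def react(situation: str) -> str:
    """Return the scripted action for a situation.

    Anything the rule book does not cover falls through to a default,
    which is exactly where a purely rule-based system has nothing to offer.
    """
    return RULES.get(situation, "no_rule_defined")


for s in ["illegally_parked_car", "suspect_draws_gun", "child_asks_for_help"]:
    print(s, "->", react(s))
```

The behavior is fully predictable: the same input always produces the same action, and an unforeseen situation simply has no rule. That is the contrast Fink draws with a system like Chappie, which changes its own behavior as it learns.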

Rojas: Do you think self-awareness is possible?

Fink: Yes, I believe it is possible, but not with the current AI approaches. It has to be vastly different from that.

Rojas: Some people predict AI will exceed human capacity within 20 years. Do you think we are within that time frame, and should we worry about that?

Fink: It is a complex question. Let me try to break it down to some extent. There are systems that are faster than humans. They can go into places we cannot go, such as radiation environments, space, and so forth. That is all hardware, and it is already happening.

There are systems that can calculate quicker than humans. That is a given too.

We have systems that play chess better than humans, and that is only a number-crunching exercise. Not to diminish those systems, but just to bring it down to the basics.

So, while that is impressive, it is not a threat to humanity. Where it becomes dicey is when you get a system that can move about and take action, weaponized or not, and is able to react to its environment based on a non-deterministic algorithm. That is, it is not scripted, and you cannot predict how the system is going to react. If you have such a system, which I think could well happen in our lifetime, then yes, it is a threat to humanity, especially if you can't get control of it.

To read this interview in its entirety, visit my blog here.