The current outbreak of measles, on pace to become the largest since the disease was declared eliminated in the U.S. more than a decade ago, was made possible in large part by a single black mark in the medical research literature -- a discredited 1998 study from Dr. Andrew Wakefield that purported to link the measles, mumps and rubella (MMR) vaccine to autism.

The Lancet, the journal in which Wakefield's study appeared, pulled the study after investigations by a British journalist and a medical panel uncovered cherry-picked data and an array of financial conflicts of interest, among other trappings of fraudulent science. Wakefield, a British gastroenterologist, had gone so far as to pay children at his son's birthday party to have their blood drawn for the research. He had also collected funds for his work from personal injury lawyers who represented parents seeking to sue vaccine makers.

Despite the journal's retraction and Wakefield being stripped of his medical license in the U.K., the study still succeeded in generating fear and doubt about vaccines. The public health repercussions are still being felt today, as evidenced by the ongoing measles outbreak, which has affected more than 121 people, according to the latest numbers from the Centers for Disease Control and Prevention. A separate outbreak of mumps, another illness the MMR vaccine protects against, is also emerging in Idaho and Washington state.

Wakefield isn't the only scientist to leave a legacy of discredited work and serious health threats -- although his case may be the most famous and the least ambiguous. The results of fabricated data and other forms of research misconduct often make their way into our policy and public discourse before they are identified and addressed within the scientific community. An analysis published this week in the journal JAMA Internal Medicine found that the U.S. Food and Drug Administration commonly identifies problematic research -- from the fraudulent to the mistaken -- during its systematic reviews of relevant studies, but rarely reports its findings to the publications in which the studies appeared. Simple sloppiness can result in damaging misinformation and misinterpretations, as can scientists exaggerating their findings in the hopes of gaining publicity or securing future funding. Then, too, there are mainstream journalists who may over- or under-emphasize certain aspects of new research, or who may not fully understand the science they're writing about.

Combine all of that with a population whose general grasp of science appears to be middling at best, and you have a recipe for an echo chamber of misinformation. "We don't have a particularly scientifically astute society," said Dr. Margaret Moon, a pediatrician and bioethicist at Johns Hopkins University. "We need to do a better job helping people understand good versus bad data."

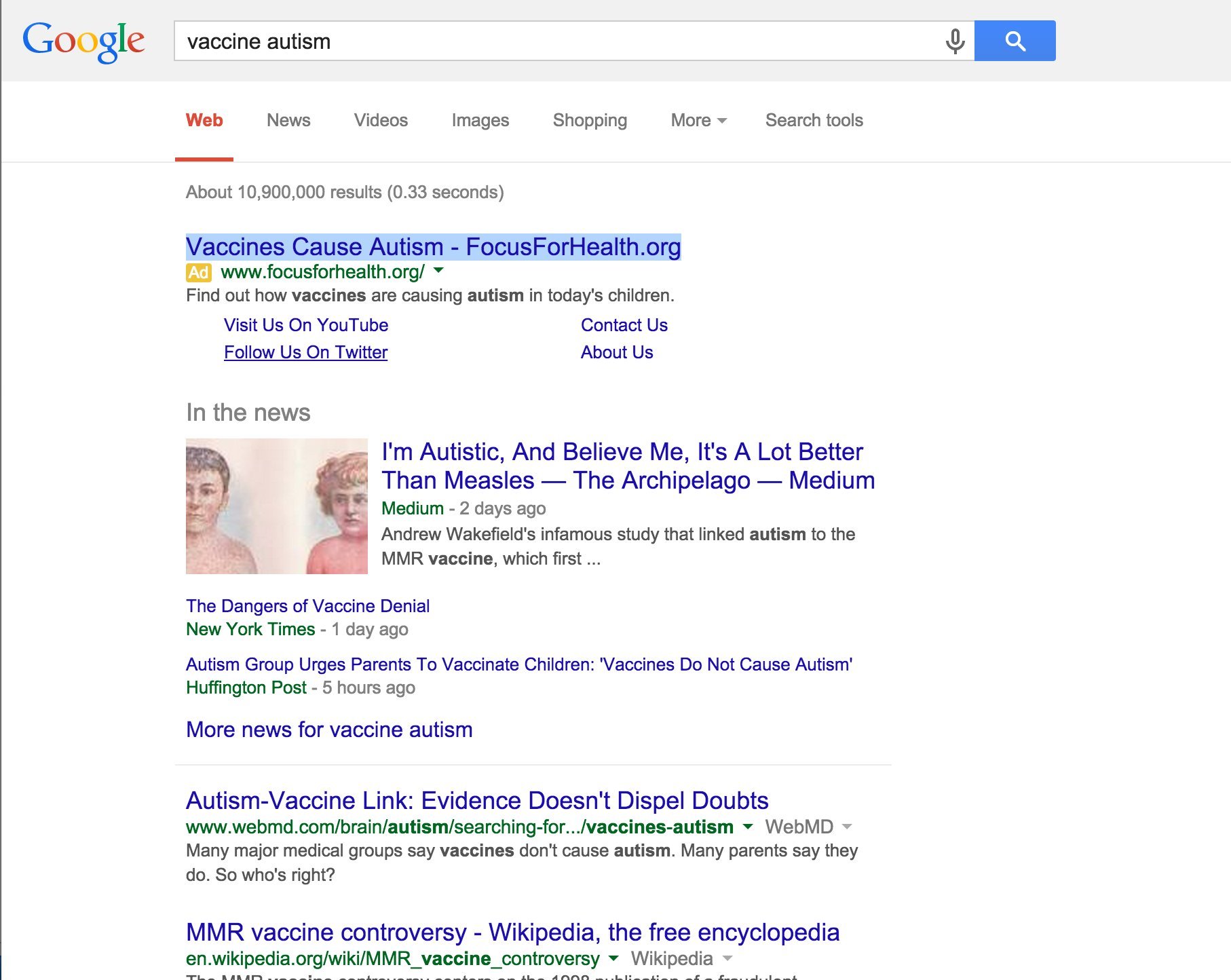

The Internet seldom helps the situation. Type "vaccine autism" into Google, and you'd think the jury was still out on the MMR vaccine. The first listed link, a paid advertisement, reads: "Vaccines cause autism." Other links concern an ongoing "controversy." For the record: Among scientists, there is no controversy. Vaccines are safe.

The case of the MMR vaccine and infectious disease is particularly clear-cut. But other questionable studies and findings have caused more insidious forms of harm that reveal how vulnerable we are to scientific dishonesty.

Take, for example, a widely covered review paper out of Stanford University that suggested organic foods don't provide greater nutritional value, or pose fewer health risks, than their conventional counterparts. That research, as The Huffington Post reported after its publication in 2012, was quickly questioned by experts in the field. Critics noted that some nutrients found in previous research to be more plentiful in organics were missing altogether from the Stanford findings. A paper like this -- that is, a review of existing scientific literature -- can be especially problematic, since the way the various studies are chosen, divvied up and combined can significantly alter any conclusions.

The Stanford study also reported that organic produce had a risk of pesticide contamination 30 percentage points lower than that of conventional fruits and vegetables. Not included in the publicly available abstract or press release, however, was the fact that pesticide residues were found in 7 percent of organics and 38 percent of conventional foods. In relative terms, that's a more impressive 81 percent difference. Critics also alleged that the Stanford authors downplayed findings of higher levels of omega-3s and lower levels of antibiotic-resistant bacteria in organic products compared with conventional foods.
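The gap between those two framings is simple arithmetic. The short Python sketch below takes the two rounded rates quoted above (7 percent and 38 percent) and computes both the absolute difference, in percentage points, and the relative reduction. The function names are illustrative only and do not come from the study.

```python
# Share of samples with detectable pesticide residues, as quoted in the
# article: 7 percent of organic foods and 38 percent of conventional foods.
ORGANIC_RATE = 0.07
CONVENTIONAL_RATE = 0.38


def absolute_difference(baseline: float, comparison: float) -> float:
    """Gap between two rates, expressed in percentage points."""
    return (baseline - comparison) * 100


def relative_reduction(baseline: float, comparison: float) -> float:
    """Gap expressed as a percentage of the baseline rate."""
    return (baseline - comparison) / baseline * 100


abs_gap = absolute_difference(CONVENTIONAL_RATE, ORGANIC_RATE)
rel_gap = relative_reduction(CONVENTIONAL_RATE, ORGANIC_RATE)

print(f"Absolute difference: {abs_gap:.0f} percentage points")
print(f"Relative reduction:  {rel_gap:.1f} percent")
```

Run as written, it reports a gap of about 31 percentage points and a relative reduction of about 81.6 percent. The study's own 30 percent figure was not necessarily computed this way; the sketch only shows how one pair of rates can be described as either a modest-sounding or an impressive-sounding difference.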

Though academic dishonesty is relatively rare, there can be pressure on scientists and institutions to punch up findings in a bid for publicity.

"I think it's important that researchers don't overstate what they find," said Cynthia Curl, an environmental health scientist at Boise State University. She said she'd like researchers to "try to keep conclusions they make about their research within the confines of what they actually found."

"For consumers, it is hard to navigate," she said.

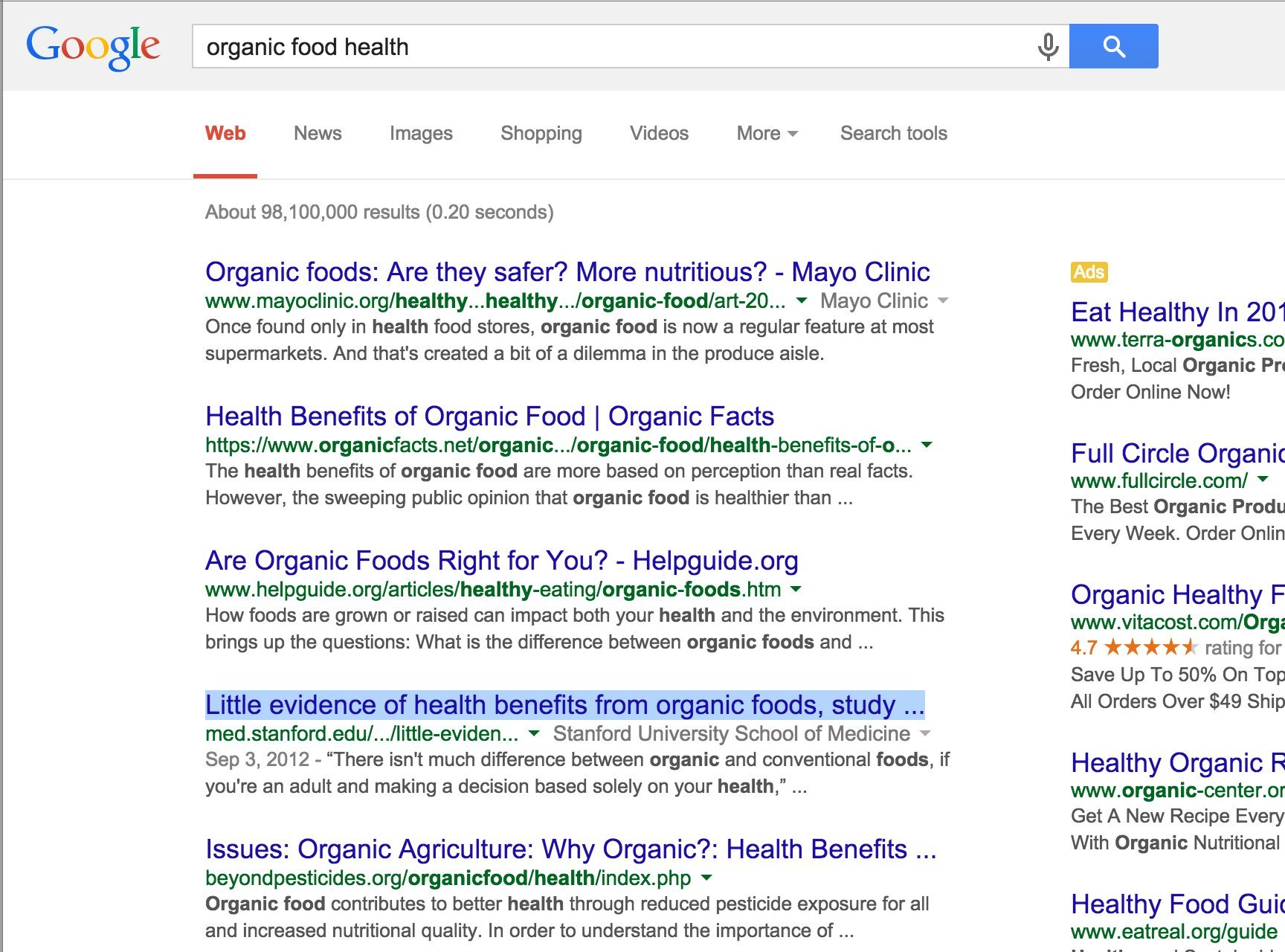

Today, a Google search for "organic food health" may also be misleading, since the Stanford study's press release appears near the top of the search results. "Little evidence of health benefits from organic foods," it reads. Below is a quote from the release: "There isn't much difference between organic and conventional foods, if you're an adult and making a decision based solely on your health."

Curl is an author of a study published last week that addresses the question of organic versus conventional. People who eat organic fruits and vegetables, she and her co-authors concluded, may have lower levels of a popular pesticide in their bodies compared to people who eat similar amounts of conventionally grown food.

"There's still a lot of controversy about what the potential benefits may be of eating organic," said Curl. "It sort of puts the onus on the public."

She added that the health benefits of eating fruits and vegetables -- whether organic or not -- generally outweigh the risks.

"Every time you go into the grocery store," she said, "you are having to make the choice: Is it worth the extra money to buy organic?"

It's not clear whether the authors of the Stanford study deliberately deceived the public, said Moon.

"There's a difference between the willful intent to deceive, and using data to persuade," she told HuffPost.

Moon also noted that study authors, and press offices, may inappropriately emphasize or overlook certain parts of the data or results. Among the criticisms of the Stanford study was the fact that the school received funding from the agricultural giants Cargill and Monsanto. The team behind the controversial study has asserted that none of that money went directly to their research.

Of course, whether a study offers specious information on purpose, or whether it does so accidentally, the result is the same.

"The fact is that, despite its mathematical base, statistics is as much an art as it is a science," the author Darrell Huff wrote in his influential 1954 book How to Lie with Statistics. "A great many manipulations and even distortions are possible within the bounds of propriety."

Perhaps no one understands this power better than corporations seeking financial gain.

"Industry is about five light years ahead of the scientists in their ability to use data to manipulate," said Moon. "They spend a lot of time and money figuring out how to do it."

Steering public perception and policy by distorting data was a common tactic in Big Tobacco's playbook, for example. That move has since been borrowed by some chemical manufacturers intent on prolonging their products' lives on the market. As industry leaders know, there's a degree of complexity and creativity inherent in all research methods, and often it's not difficult to come up with numbers that say what you want them to say. The impact of such misconduct can linger, even long after the tainted research has been debunked.

One strategy often used to obscure inconvenient truths is to water down the data. The 16 Cities Study of secondhand cigarette smoke, a federal government project that began in 1996, is a classic case. The study's authors concluded that smoking workplaces posed negligible exposures to non-smokers. Researchers who later re-evaluated the study, however, came to a different conclusion. In their follow-up, they noticed that the study's definition of a "smoking workplace" included buildings where smoking was restricted to designated areas, or where no smoking was actually observed. Reorganizing the data to account for the actual amount of smoking in the workplaces, the reviewing authors found that smoke-free workplaces would, in fact, significantly reduce secondhand smoke exposures for non-smokers.
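The dilution effect the reviewers described can be illustrated with a toy calculation. The Python sketch below uses invented exposure values (hypothetical, not figures from the 16 Cities Study or its reanalysis) for non-smokers in four kinds of workplaces, and compares a broad definition of "smoking workplace" with a narrow one.

```python
from statistics import mean

# HYPOTHETICAL exposure readings (arbitrary units) for non-smokers in four
# kinds of workplaces. These numbers are invented for illustration only and
# are not data from the 16 Cities Study.
unrestricted_smoking = [9.0, 11.0, 10.0]
designated_areas_only = [2.0, 3.0, 1.0]
no_smoking_observed = [0.5, 0.5, 0.5]
smoke_free = [0.4, 0.6, 0.5]

# Broad definition: any workplace that nominally allows smoking counts as a
# "smoking workplace", so low-exposure sites are pooled with high-exposure ones.
broad_group = unrestricted_smoking + designated_areas_only + no_smoking_observed

# Narrow definition: only workplaces where smoking actually went on freely.
narrow_group = unrestricted_smoking

print(f"Smoke-free mean:       {mean(smoke_free):.2f}")
print(f"Broad 'smoking' mean:  {mean(broad_group):.2f}")
print(f"Narrow 'smoking' mean: {mean(narrow_group):.2f}")
```

With these made-up numbers, the broad definition yields an average of about 4.17 units against 0.50 for smoke-free sites, while the narrow definition yields 10.00. Pooling sites where little or no smoking happened pulls the "smoking" average toward the smoke-free one, shrinking the apparent benefit of going smoke-free.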

The reviewers also noted the undisclosed involvement of the R.J. Reynolds Tobacco Company and the tobacco industry's Center for Indoor Air Research in the 16 Cities Study. The project, the reviewing authors concluded, had been "specifically conceived and designed to forestall regulation of workplace smoking."

Once again, the same data can tell very different stories depending on how the statistics are presented. "The numbers have no way of speaking for themselves," the author and statistician Nate Silver wrote in his 2012 book The Signal and the Noise. "We speak for them. We imbue them with meaning."

Several studies, including surveys of research on food products and secondhand smoke, suggest that when a third party has a hand in funding scientific research, it tends to influence the ending of that story -- whether the scientists involved are conscious of it or not.

Devra Davis, president and founder of the research and public policy organization Environmental Health Trust, has suggested that past research concerning cell phone radiation involved similarly manipulative methods. In one 2011 study, financially supported by the cell phone industry, European researchers determined that kids who averaged one or more weekly cell phone calls over a period of at least six months were not at an increased risk of developing a brain tumor, compared to peers who were non-users. But Davis and other experts have argued that no one could reasonably expect to find a link, given such limited cell phone use and such a short time frame. Brain cancer can take decades to develop.

"You end up with results inconclusive by design," Davis told HuffPost.

There is no shortage of scientific data in the world today. Nor is there any shortage of people with stakes in how all that data is created, analyzed and interpreted. Finding better ways to distinguish between honest and faulty science is therefore a matter of growing interest. For example, Retraction Watch, a blog that tracks retractions of scientific papers, is keeping an eye out for such threats -- and warning of their potential downstream effects.

Only one to three papers per 10,000 published are ever retracted, according to one 2010 study. But as Retraction Watch reports, many more cases of scientific misconduct are "swept under the rug."

Moon also noted growing scrutiny of the peer-review process, especially where financial conflicts of interest may be involved. As some recent examples have shown, corporate money can be funneled into the pockets of academic researchers -- unbeknownst to the public, or even to other scientists.

Moon acknowledged that while the great majority of scientific researchers are honest people trying to do good work, some are not.

"That's a fact," she said. "The good news is that science is set up to find these things. But is it set up to find them before damage is done? No way."