Nate Cohn argues some automated polls are no better than guessing. Americans are divided over President Obama's timeline for Afghanistan. And bad first pitches can make for a good chart. This is HuffPollster for Thursday, May 29, 2014.

'WHEN POLLING IS MORE LIKE GUESSING' - Nate Cohn: "Election analysts and forecasters depend on accurate polling. Unfortunately, there’s not much of it so far this cycle. Many of the surveys to date have been conducted by firms that use automated phone surveys and combine deficient sampling with baffling weighting practices….last week, one automated polling firm, Rasmussen Reports, released a survey of likely Georgia voters that was significantly younger than one would expect for a midterm election. A hefty 34 percent were 18 to 39, while voters over age 65 represented just 17 percent of likely voters….There were other peculiarities in the Rasmussen survey. Voters of some 'other' race — neither white nor black — represented 12 percent of the sample, which would smash the record 8.7 percent of voters who were of 'other' race in 2012. More realistically, 'other' voters might be expected to represent 5 to 7 percent of voters this November. On the other hand, just 24 percent of voters were black, a number likely to be too low. Rasmussen Reports said that it considered state turnout estimates, along with census demographic data and exit polls, but did not comment on the demographics of the Georgia sample." [NYT]

Is the difference due to the sampling frame? Cohn: "In contrast with most polls conducted by news media organizations, which collect random samples of adults and then weight to census demographic targets, most automated firms call from lists of registered voters and therefore do not collect a random sample of adults. As a result, they do not weight to census targets for adults, and instead weight to estimates for the likely composition of the November electorate. That approach can be effective if the pollster can accurately model the electorate, as the Obama campaign did in 2012. But low-cost automated polling firms often operate with only a few staff members, who seem to have less capability to accurately estimate the composition of the electorate….Although polls from such firms may still be useful for broad-stroke characterizations, like 'competitive' or 'not competitive,' they may not be reliable for precise measurements of public opinion."
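To make Cohn's point concrete, here is a minimal post-stratification sketch. It assumes that weighting to a modeled electorate works cell by cell, with each cell's weight equal to its target share divided by its sample share. The sample shares are the Rasmussen Georgia age figures quoted above; the 40-64 share is simply the remainder. The target electorate and the support-by-age numbers are hypothetical and only illustrate how much the topline depends on the assumed electorate.

```python
# Minimal post-stratification sketch: weight each age group so the weighted
# sample matches an assumed likely-electorate composition.

# Unweighted age shares from the Rasmussen Georgia sample as quoted above;
# the 40-64 share is just the remainder (1 - 0.34 - 0.17).
sample_shares = {"18-39": 0.34, "40-64": 0.49, "65+": 0.17}

# HYPOTHETICAL target composition for a midterm electorate (illustration only).
target_shares = {"18-39": 0.22, "40-64": 0.50, "65+": 0.28}

# HYPOTHETICAL support for one candidate within each age group.
support_by_age = {"18-39": 0.55, "40-64": 0.48, "65+": 0.40}


def cell_weights(sample, target):
    """Weight for each cell = target share / sample share."""
    return {group: target[group] / sample[group] for group in sample}


def weighted_support(sample, weights, support):
    """Share supporting the candidate after applying the cell weights."""
    total = sum(sample[g] * weights[g] for g in sample)
    return sum(sample[g] * weights[g] * support[g] for g in sample) / total


if __name__ == "__main__":
    unweighted = sum(sample_shares[g] * support_by_age[g] for g in sample_shares)
    weights = cell_weights(sample_shares, target_shares)
    print({g: round(w, 2) for g, w in weights.items()})
    print(f"unweighted support: {unweighted:.1%}")
    print(f"weighted support:   {weighted_support(sample_shares, weights, support_by_age):.1%}")
```

With these made-up inputs, the same raw answers move from about 49 percent support unweighted to about 47 percent weighted. A different guess about the electorate would move the result again, which is why the accuracy of that guess matters so much.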

...Probably not - Cohn frames his argument around the contrast between automated and live-interviewer polls, but as MassINC pollster Steve Koczela points out via Twitter, "most of [his] objections are about bad weighting choices rather than IVR as a mode." And Cohn is wrong when he asserts that "most" automated polls rely on voter lists while "all or nearly all" media polls rely on random digit samples. First, Rasmussen, the second-most prolific of the automated pollsters so far in the 2014 cycle, has always used random-digit-dial sampling, combined with a likely voter screen, to do its pre-election polling (some of Rasmussen's issue polls now report on all adults). Perhaps more important, a long list of state-level media pollsters now relies on samples drawn from registered voter lists to conduct live-interviewer polls. These include (but are not limited to) California's Field Poll, the University of Cincinnati/Ohio Newspaper Poll, as well as the election polls conducted by WBUR/MassINC, Chicago Tribune/WGN, Morning Call/Muhlenberg College, the Civitas Institute, Franklin & Marshall College, Hampton University, the University of Florida and Suffolk University. [@skoczela, @Nate_Cohn]

And not all random-digit sample polls are created equal - HuffPost's Natalie Jackson: "Disclosure of sampling & weighting varies widely among even pollsters who use random-digit-dial (RDD) sampling—is it really enough to say 'weighted to Census'? There are many ways to weight to Census parameters, different characteristics to use in the weighting, and different software programs and other ways of calculating the weights. Then, are the weights trimmed, meaning they are only allowed to be so high or so low? Another question is how accurate the Census parameter is at any given time since the Census is only done every 10 years, with other sample surveys in between. Which Census estimates are being used? Beyond the weighting issues, we rely on self-reports for voter registration plus any number of features for likely voter models that are self-reported and known to be subject to social desirability bias."
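Jackson's questions about weighting choices and trimming can be illustrated with a small sketch. It uses raking (iterative proportional fitting), one common way to weight to several characteristics at once. It then caps weights at hypothetical bounds and reports Kish's approximate design effect, which shows how much unequal weights cost in effective sample size. The respondents, targets and trimming caps are all hypothetical; real pollsters differ in every one of these choices, which is exactly her point.

```python
from collections import defaultdict

# HYPOTHETICAL sample of 100 respondents, built from (age, race) cell counts.
CELL_COUNTS = {
    ("18-39", "white"): 20, ("18-39", "black"): 8, ("18-39", "other"): 6,
    ("40+", "white"): 48, ("40+", "black"): 14, ("40+", "other"): 4,
}
respondents = [
    {"age": age, "race": race}
    for (age, race), count in CELL_COUNTS.items()
    for _ in range(count)
]

# HYPOTHETICAL population targets for each weighting variable.
targets = {
    "age": {"18-39": 0.25, "40+": 0.75},
    "race": {"white": 0.64, "black": 0.30, "other": 0.06},
}


def weighted_shares(respondents, weights, var):
    """Weighted share of each category of `var`."""
    totals = defaultdict(float)
    for w, r in zip(weights, respondents):
        totals[r[var]] += w
    grand = sum(totals.values())
    return {cat: t / grand for cat, t in totals.items()}


def rake(respondents, targets, max_iter=100, tol=1e-10):
    """Raking: adjust weights one variable at a time until every variable's
    weighted margins match its targets (weights keep summing to n)."""
    weights = [1.0] * len(respondents)
    for _ in range(max_iter):
        worst = 0.0
        for var, shares in targets.items():
            current = weighted_shares(respondents, weights, var)
            for i, r in enumerate(respondents):
                factor = shares[r[var]] / current[r[var]]
                worst = max(worst, abs(factor - 1.0))
                weights[i] *= factor
        if worst < tol:  # every margin already matched its target
            break
    return weights


def trim(weights, low, high):
    """Clip weights to [low, high] times the mean weight, then rescale so the
    total is unchanged. Rescaling can push a few weights slightly past the
    caps, and margins no longer match exactly; a fuller version would
    alternate trimming and raking."""
    mean_w = sum(weights) / len(weights)
    clipped = [min(max(w, low * mean_w), high * mean_w) for w in weights]
    scale = sum(weights) / sum(clipped)
    return [w * scale for w in clipped]


def kish_design_effect(weights):
    """Kish's approximate design effect from unequal weights: n * sum(w^2) / (sum w)^2."""
    return len(weights) * sum(w * w for w in weights) / sum(weights) ** 2


if __name__ == "__main__":
    raked = rake(respondents, targets)
    trimmed = trim(raked, low=0.5, high=1.5)  # HYPOTHETICAL caps
    for label, w in (("raked", raked), ("trimmed", trimmed)):
        print(label, f"min={min(w):.2f} max={max(w):.2f}",
              f"deff={kish_design_effect(w):.2f}",
              {k: round(v, 3) for k, v in weighted_shares(respondents, w, "race").items()})
```

Trimming trades a little accuracy on the weighting targets for less variable weights. Whether and where a pollster draws those caps is one of the undisclosed choices Jackson describes.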

AMERICANS DIVIDED OVER OBAMA'S AFGHANISTAN TIMETABLE - Emily Swanson: "Americans are divided over President Barack Obama's plan to pull almost all U.S. troops out of Afghanistan by the end of 2016, according to a new HuffPost/YouGov poll, but few want a commitment longer than the one the president has proposed. Obama announced Tuesday that he plans a gradual drawdown of troops that would leave about 10,000 troops in Afghanistan by the end of this year, cut that in half by the end of 2015 and remove most remaining troops by the end of 2016. Thirty-one percent of Americans in the new poll said the U.S. should stick to that timeline, while 35 percent want to withdraw all troops even sooner. Only 20 percent of Americans, though, said they want a commitment of U.S. forces in Afghanistan 'as long [as] it takes to accomplish [U.S.] goals.' In fact, more Americans (40 percent) think it was a mistake to send troops to Afghanistan in the first place than think it wasn't (36 percent)." [HuffPost]

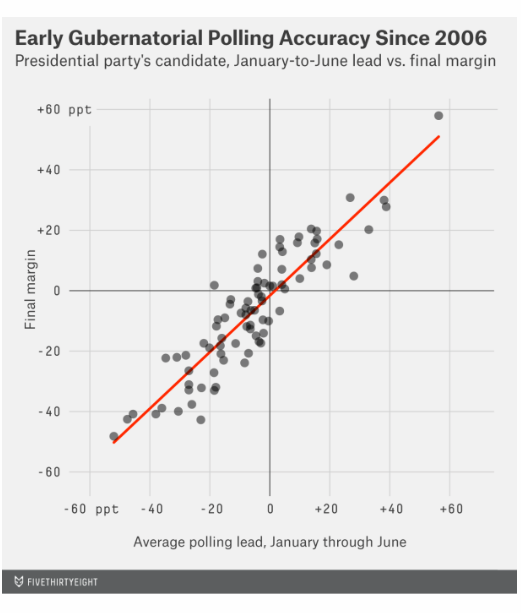

EARLY GOVERNOR'S RACE POLLING IS ACCURATE TOO - In an article predicting that Republicans will retain their edge in governorships, Harry Enten finds early polls to be just as predictive in governor's races as in Senate contests: "Early gubernatorial polling is, like early Senate polling, fairly predictive of who will win in the fall. I collected polling data for gubernatorial elections since 2006...I used the same averaging technique as I did in my article on senatorial elections, which is similar to the average RealClearPolitics applies. Of the 84 races since 2006 with available data, the average error between early polling and the election results was just under 7 percentage points. The average error for gubernatorial polling at the end of the campaign in 2012 was 4.4 points, so we’ll gain some accuracy as the election nears, but not a huge amount. Perhaps more impressively, the candidate leading in early polling has won 91.6 percent of the time." [538]
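Enten's two summary numbers, average error and how often the early leader won, can be computed along these lines. This is a minimal sketch, not his code. It assumes each race already has an early polling average and a final result, both expressed as a Democratic-minus-Republican margin in points, and that "error" means the absolute gap between those margins. The four races are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Race:
    name: str
    poll_margin: float    # early polling average, Dem minus Rep, in points
    result_margin: float  # final result, Dem minus Rep, in points


# HYPOTHETICAL races for illustration only.
EXAMPLE_RACES = [
    Race("A", poll_margin=5.0, result_margin=8.0),
    Race("B", poll_margin=-3.0, result_margin=2.0),
    Race("C", poll_margin=-10.0, result_margin=-14.0),
    Race("D", poll_margin=2.0, result_margin=6.0),
]


def average_absolute_error(races):
    """Mean absolute gap between the early polling margin and the final margin."""
    return mean(abs(r.poll_margin - r.result_margin) for r in races)


def leader_win_rate(races):
    """Share of races in which the early polling leader won.
    Races with a tied polling average are skipped; exact ties in the
    result are not handled."""
    decided = [r for r in races if r.poll_margin != 0]
    wins = sum(1 for r in decided if (r.poll_margin > 0) == (r.result_margin > 0))
    return wins / len(decided)


if __name__ == "__main__":
    print(f"average error: {average_absolute_error(EXAMPLE_RACES):.1f} points")
    print(f"leader won: {leader_win_rate(EXAMPLE_RACES):.0%} of races")
```

In this toy set the average error is 4 points and the early leader wins three of four races; race B shows how a modest error can still flip a close contest.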

MAYA ANGELOU'S LESSON FOR MARKET RESEARCHERS - From the writer's 2002 autobiography, A Song Flung Up To Heaven, recounting her time as a door-to-door market researcher: "'Good morning, I am working for a company that wants to improve the quality of the goods you buy. I'd like to ask you a few questions. Your answers will ensure that you will find better foods in your supermarket and probably at a reduced price.' The person who wrote those lines, for interviewers to use with black women, knew nothing of black women. If I had dared utter such claptrap, at best I would have been laughed off the porch or at worst told to get the hell away from the woman's door….I knew that a straight back and straight talk would get the black woman's attention every time. 'Good morning. I have a job asking questions.' At first there would be wariness. 'What questions? Why me?' 'There are some companies that want to know which products are popular in the black community and which are not.' 'Why do they care?' 'They care because if you don't like what they are selling, you won't buy, and they want to fix it so you will.' 'Yeah, that makes sense. Come on in.'" [A Song Flung Up To Heaven, h/t Fran Featherston/AAPORnet]

HUFFPOLLSTER VIA EMAIL! - You can receive this daily update every weekday via email! Just click here, enter your email address, and click "sign up." That's all there is to it (and you can unsubscribe anytime).

THURSDAY'S 'OUTLIERS' - Links to the best of news at the intersection of polling, politics and political data:

-ACA approval has remained relatively static since the end of open enrollment. [Gallup]

-PPP (D) puts Jeff Merkley (D) well ahead of opponent Monica Wehby in the Oregon Senate race. [PPP]

-Wenzel Strategies (R) finds Mitch McConnell (R) leading Alison Lundergan Grimes (D) by a margin that falls within the poll's margin of error. [Wenzel]

-Rasmussen gives Tom Cotton (R) a 4-point lead over Mark Pryor (D). [Rasmussen]

-Only 30 U.S. House races look genuinely competitive, according to the Washington Post's Election Lab model. [WashPost]

-Aaron Blake notes the wide gender gap in opinions of Hillary Clinton. [WashPost]

-Democrats hope political branding can help them in the Senate this fall. [NYT]

-Americans are more worried by "global warming" than "climate change." [NYT]

-Justin Wolfers uses noise in GDP data to illustrate the perils of relying on economic indicators in election forecasts. [NYT]

-Pollster John Della Volpe talks about millennial voting with Taegan Goddard. [The Week]

-The Census Bureau announced this week that it will begin counting same-sex spouses as married couples. [Pew Research]

-Manuel Pastor traces declining Latino identification on the Census to a change in how questions were asked. [HuffPost]

-Nate Silver and Allison McCann look at what your name says about your age. [538]

-Unlike most of the rest of the country, Wisconsin has more bars than grocery stores: 2.7 times as many. [Flowing Data]

-Christopher Ingraham charts the worst ever first pitches. [WashPost]
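The Wenzel item above describes a lead "within the margin of error." A poll's published margin usually describes a single candidate's share, while the uncertainty on the gap between two candidates measured in the same sample is larger. This is a minimal sketch under textbook assumptions: a simple random sample, the 95 percent normal approximation, and no design effect from weighting. The sample size and shares are hypothetical, not Wenzel's figures.

```python
import math

Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def moe_share(p, n):
    """95% margin of error, in points, for one candidate's share p (0 to 1)."""
    return 100 * Z_95 * math.sqrt(p * (1 - p) / n)


def moe_lead(p1, p2, n):
    """95% margin of error, in points, for the lead p1 - p2 when both shares
    come from the same simple random sample of size n."""
    return 100 * Z_95 * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)


if __name__ == "__main__":
    # HYPOTHETICAL poll: 1,000 respondents, 45 percent vs. 42 percent.
    n, p1, p2 = 1000, 0.45, 0.42
    print(f"reported MOE (p = 0.5): +/-{moe_share(0.5, n):.1f} points")
    print(f"MOE on the 3-point lead: +/-{moe_lead(p1, p2, n):.1f} points")
```

For this made-up poll, the usual headline margin is about 3.1 points, but the margin on the lead itself is close to 5.8 points. Weighting, as discussed above, would widen both.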