Just a few days ago, I moderated a webinar with four leading researchers and statisticians to discuss the use of margin of error with non-probability samples. To a lot of people, that sounds like a pretty boring topic. Really, who wants to listen to 45 minutes of people arguing about the appropriateness of a statistic?

Who, you ask? Well, more than 600 marketing researchers, social researchers, and pollsters registered for that webinar. That's as many people as would attend a large conference about far more exciting things, like using Oculus Rift and the Apple Watch for marketing research purposes. What this tells me is that there is a lot of quiet grumbling going on.

I didn't realize how contentious the issue was until I started looking for panelists. My goal was to include four or five very senior statisticians with extensive experience using margin of error on either the academic or the business side. As I approached great candidate after great candidate, a theme quickly arose among those who weren't already booked for the same time slot: the issue was too contentious to discuss in such a public forum. Clearly, this was a topic that had to be brought out into the open.

The margin of error was designed to be used when generalizing results from probability samples to the population. The point of contention is that a large proportion of marketing research, and even polling research, is not conducted with probability samples. True probability samples are largely theoretical: it is generally impossible to create a sampling frame that includes every single member of a population, and it is impossible to force every randomly selected person to participate. Beyond that, the volume of non-sampling errors that are guaranteed to enter the process, from poorly designed questions to overly long, complicated surveys to poorly trained interviewers, means that non-sampling errors could have an even greater negative impact than sampling errors do.

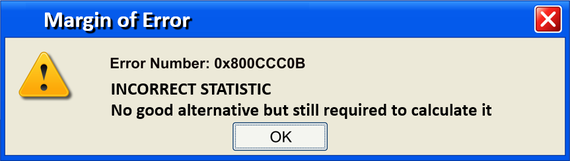

Any reasonably competent statistician can calculate the margin of error to numerous decimal places and attach it to any study. But that doesn't make it right. That doesn't make the study more valid. That doesn't eliminate the potentially misleading effects of leading questions and skip logic errors. The margin of error, a single number, has erroneously come to embody the entire system of processes related to the quality of a study, which it cannot do.
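To illustrate how little goes into that single number, here is a minimal sketch of the textbook margin of error for a proportion, assuming a simple random sample from a large population and a 95% confidence level (z = 1.96). The function name and the example poll are hypothetical, chosen only for illustration.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes a simple random (probability) sample from a large population.
    It reflects sampling error only; coverage, nonresponse, question wording,
    and interviewer effects do not appear anywhere in the formula.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1.0 - p) / n)


# Hypothetical poll: 1,000 respondents, 50% choosing a given answer
moe = margin_of_error(0.5, 1000)
print(f"+/- {moe * 100:.1f} percentage points")  # prints "+/- 3.1 percentage points"
```

Nothing in the inputs describes how respondents were recruited, so the calculation returns the same plus or minus 3.1 points for an opt-in panel as for a true probability sample, and the same value whether the questionnaire was well designed or riddled with leading questions.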

In spite of these issues, the media continue to demand that the margin of error be reported, even when it's inappropriate and even when it's insufficient. So to the media, I make this simple request.

Stop insisting that polling and marketing research results include the margin of error.

Sometimes, the best measure of the quality of research is how transparent your vendor is when they describe their research methodology, and the strengths and weaknesses associated with it.

Annie Pettit, PhD is the Chief Research Officer at Peanut Labs, a company that specializes in self-serve sample, surveys, and polling. She is also Vice President, Research Standards at Research Now. Annie specializes in data quality, sampling and survey design, and social listening. She won the MRIA Award of Outstanding Merit in 2014, Best Methodological Paper at ESOMAR in 2013, and the 2011 AMA David K. Hardin Award.