Democrats weren't the only losers on election night. As has been reported, pollsters also had egg on their faces.

The first sign that things had gone awry came when we learned that the Virginia Senate race was too close to call. How could this be, given that most polls had incumbent Senator Mark Warner leading his Republican challenger Ed Gillespie by an average of 9 points? In the end, Warner held on to win by a very narrow margin. But unfortunately for pollsters, this race was just the tip of the iceberg. As the night wore on, in race after race, the election polls proved to be not just wrong, but biased in favor of the Democrats.

Steven Shepard cites several examples of races in which the polls badly underestimated the extent of the GOP victory. In addition to the Warner-Gillespie race, these include the Senate races in Kentucky, Iowa, Arkansas, Georgia, Colorado, and Kansas, as well as the gubernatorial race in Maryland.

According to Nate Silver, Senate polls conducted in the last three weeks of the campaign "overestimated the Democrat's performance by 4 percentage points." Likewise, gubernatorial polls "overestimated Democrat's performance by 3.4 points."

This has prompted widespread questions about whether we can trust the polls going forward. What about 2016? Does what happened in the midterm (and in 2012, for that matter) signal the end of election polling?

The short answer is not really. Reports of the death of election polling are -- to misquote Mark Twain -- 'greatly exaggerated'. Election polling is big business and it isn't going anywhere anytime soon. Nor should it. Pre-election polling can be useful and should continue, but it has to be understood for what it is and reported properly. Without getting too far into the statistical weeds, here's why no one should be surprised by what happened on election night.

Why we shouldn't be surprised that the polls were wrong:

1. Polls don't measure behavior well

One of the smartest introductory books on polling is Earl Babbie's "Survey Research Methods." While the book is now dated, it is still on point as far as the basics of polling are concerned. Babbie discusses polling as one of several methods we use to collect information about the social world (others include experiments, focus groups, content analysis, etc.). And like all of these other methods, there are things polling does well and things it doesn't do so well. Among the things it does well: capturing opinions and attitudes. Among the things it doesn't do so well: measuring behavior. If you think about it, this shouldn't be a surprise. At its most basic, polling involves asking people questions and recording their responses. While you can ask respondents anything -- and in many cases they will answer -- the responses they give aren't equally valuable. People can tell you clearly and honestly (we hope) their opinions and attitudes, but behavior is a different ball game. When it comes to what they have done in the past, people will sometimes lie about behavior that seems unacceptable (e.g., taking drugs or previously voting for a now unpopular politician). But beyond that, people also forget and misreport quite innocently. And when it comes to future behavior, people might honestly intend to do something, but life goes on and, for many reasons, they are sometimes unable to do what they said they would.

So back to the election polls -- unlike most other types of polling, election polling is, by design, fraught with difficulty because it is predicated on behavior. Namely -- is the respondent going to vote or not? Since polls don't measure behavior particularly well, election polling starts out at a distinct disadvantage.

2. Election polls are interested in a different population -- likely voters

Unlike most other types of surveys, which focus on the entire adult population, election polls are interested in a narrower population that is difficult to capture -- likely voters. And the question pollsters have always confronted is how to identify this population and how to sample it. Over the decades they have come up with a variety of ways to target this population, and they should be applauded for that. It is not an easy task. But it also shouldn't be a surprise that the results are off as often as they are on. Most other types of surveys capture attitudes and opinions using probability sampling (i.e., sampling in which everyone in the population has an equal chance of being selected to participate).

Over the years we have learned that this type of randomness (or semi-randomness) is what makes polls statistically reliable. Unfortunately, election polls cannot rely on probability sampling alone, because we are only interested in a narrower population -- likely voters -- and no one knows in advance exactly who belongs to it. As a result, pollsters do the best they can. Once they reach a potential respondent they immediately screen for future behavior -- will this person go to the polls? Is this a likely voter? But as much as the methods of identifying likely voters have been refined over the years, there is no exact, scientific, foolproof way to do this. Polls are not a good judge of behavior, particularly future behavior, which is key in the electoral context.

3. Weighting

One of the ways in which pollsters try to make sure their sample is representative of the population is by weighting. In the simplest terms, weighting works as follows: if the sample includes 3 percent African-Americans but it is estimated that African-Americans account for 9 percent of the population, the pollster will "weight" the responses of their African-American respondents so they count three times as much. The goal is noble, but the activity itself is fraught with challenges and uncertainties. Among the most common concerns: how do we know whether the African-Americans sampled accurately represent the views and attitudes of all African-Americans? What if the African-Americans in the sample were more libertarian than most African-Americans in the population? How might that change or bias the results?
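The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 3 percent and 9 percent shares come from the example above, while the candidate support figures and the function names are hypothetical and invented for the sketch. It is not any particular pollster's method.

```python
def group_weights(sample_shares, population_shares):
    """Weight for each group = its population share / its sample share."""
    return {g: population_shares[g] / sample_shares[g] for g in sample_shares}


def weighted_estimate(sample_shares, support_by_group, weights):
    """Overall support after each group's responses are scaled by its weight."""
    total = sum(sample_shares[g] * weights[g] for g in sample_shares)
    weighted_sum = sum(
        sample_shares[g] * weights[g] * support_by_group[g] for g in sample_shares
    )
    return weighted_sum / total


# Shares from the example in the text: 3 percent of the sample, 9 percent of the population.
sample_shares = {"african_american": 0.03, "everyone_else": 0.97}
population_shares = {"african_american": 0.09, "everyone_else": 0.91}

# Hypothetical support for a candidate within each group (made-up numbers).
support_by_group = {"african_american": 0.90, "everyone_else": 0.45}

weights = group_weights(sample_shares, population_shares)
unweighted = sum(sample_shares[g] * support_by_group[g] for g in sample_shares)
weighted = weighted_estimate(sample_shares, support_by_group, weights)

# The concern raised in the text: suppose the few African-Americans who were
# sampled are unrepresentative and show 70 percent support instead of 90.
skewed_support = dict(support_by_group, african_american=0.70)
weighted_skewed = weighted_estimate(sample_shares, skewed_support, weights)

print(f"weight applied to African-American respondents: {weights['african_american']:.2f}")
print(f"unweighted estimate: {unweighted:.4f}")
print(f"weighted estimate:   {weighted:.4f}")
print(f"weighted estimate with an unrepresentative subsample: {weighted_skewed:.4f}")
```

With these made-up numbers, weighting moves the estimate from about 46.4 percent to about 49.1 percent. But if the small subsample is off by 20 points, the weighted estimate is off by about 1.8 points rather than 0.6, because tripling a group's weight also triples the effect of any error within that group.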

4. Non-response and other forms of bias

Not only do election pollsters have to contend with the challenges specific to election polling, they are also subject to the challenges that all pollsters face. So on top of the difficulty of trying to identify likely voters, they also have to contend with other sources of bias, such as non-response bias. Non-response bias occurs when the responses of those who participated in the survey don't accurately match the responses those who declined to take part would have given. This problem has arguably become more pronounced as the number of Americans with landlines has decreased and reliance on cell phones has increased. But non-response bias is only one difficulty all pollsters face. Bias can also be introduced by problematic question wording (e.g., leading questions, which prompt a respondent to answer in one way, or double-barreled questions, which really ask two things at once and leave the respondent confused and the pollster with unreliable information). The short of it is that election pollsters try as much as possible to minimize all forms of bias, both those that are specific to election polling and those that are common to all forms of survey research.
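To see how much non-response can matter, here is a minimal Python sketch. All the numbers and the function name are hypothetical. It relies only on the identity that the true population figure is a blend of respondents and non-respondents, weighted by the response rate.

```python
def nonresponse_bias(response_rate, respondent_support, nonrespondent_support):
    """Gap between what respondents say and the true population figure.

    The true figure blends respondents and non-respondents in proportion
    to the response rate. A real poll never observes the non-respondent
    figure, so it is supplied here purely for illustration.
    """
    true_support = (
        response_rate * respondent_support
        + (1 - response_rate) * nonrespondent_support
    )
    return respondent_support - true_support


# Hypothetical: 10 percent of those contacted respond, and 52 percent of them
# back a candidate, while 47 percent of those who declined would have.
bias = nonresponse_bias(0.10, 0.52, 0.47)
print(f"overstatement of support: {bias * 100:.1f} points")
```

In this made-up case the poll overstates support by 4.5 points, which is the 5-point gap between the two groups scaled by the 90 percent who did not respond. The lower the response rate, the more of that gap shows up in the result.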

5. History of Polling - Inaccuracies occur regularly

We shouldn't be surprised by what happened during the 2014 midterm because this is part of the history of election polling. It is accurate just about as often as it is inaccurate. As Silver notes, the "type of error" we saw in the 2014 midterm is "not unprecedented."

"instead it's rather common... a similar error occurred in 1994, 1998, 2002, 2006 and 2012.... That the polls had relatively little bias in a number of recent election years -- including 2004, 2008 and 2010 -- may have lulled some analysts into a false sense of security about the polls."

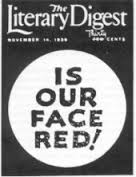

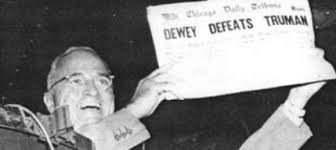

The history of election polling, going back to the infamous Literary Digest poll and the 1948 "Dewey Defeats Truman" debacle, is testament to the fact that what happened this time around is not unique. It is also testament to the fact that after each incident the polling community has done careful analysis to try to correct the problem. The self-reflection that is occurring now will undoubtedly affect how election polling is done in 2016. That doesn't mean polls leading up to 2016 will be foolproof or completely accurate, and that is the point: election polling by definition will never achieve that kind of perfection. It can't.

How can we make sure this doesn't happen again?

The bottom line is we can't. Inaccuracies and errors are part of the nature and essence of election polling. It will be off almost as often as it's on. That said, we can do a better job of reporting results and putting them into context. Unfortunately, polls have become politicized. When the results of pre-election polls don't reflect the interests of one side or the other, that side often bemoans them. We saw this in the lead-up to 2012, when many Republicans claimed the polls were biased or "skewed". They were right that the polls were skewed, but what they failed to note is that the polls were 'skewed' in the Republicans' own direction. Similarly, in the lead-up to 2014 many Democrats complained the polls were biased and, once again, they were right. But what they failed to acknowledge was that this time the bias was in their favor.

The lesson is that we need to take what interested parties on both sides say about the polls with some skepticism. Their interests will always outweigh their objectivity. Instead, reporters and pollsters need to be clear in their discussions of poll results that election polls bring with them special challenges, which make them as accurate at times as they are inaccurate at others. To their credit, pollsters do their best to correct these inaccuracies and inherent biases, but they will never be able to do so completely.

Years ago, when I was training to be a pollster, I had the chance to work with the legendary Bud Roper, son of Elmo Roper, one of the fathers of public opinion polling. By the time I met him, he was semi-retired and devoting much of his life not only to perfecting his craft but also to helping teach a new generation of pollsters. One of the things I remember Bud saying over and over again was that polling "is as much art as it is science, probably a bit more art". That is particularly true of election polling. For all the statistics and science behind it, polling, like all forms of social research, is a human endeavor, and as such it will seldom be perfect or 100 percent accurate. As with most things human, we are working within a margin, doing the best we can to get close. That is also what makes it an exciting, ever-changing and worthwhile endeavor, particularly when it is consumed and understood for what it is.